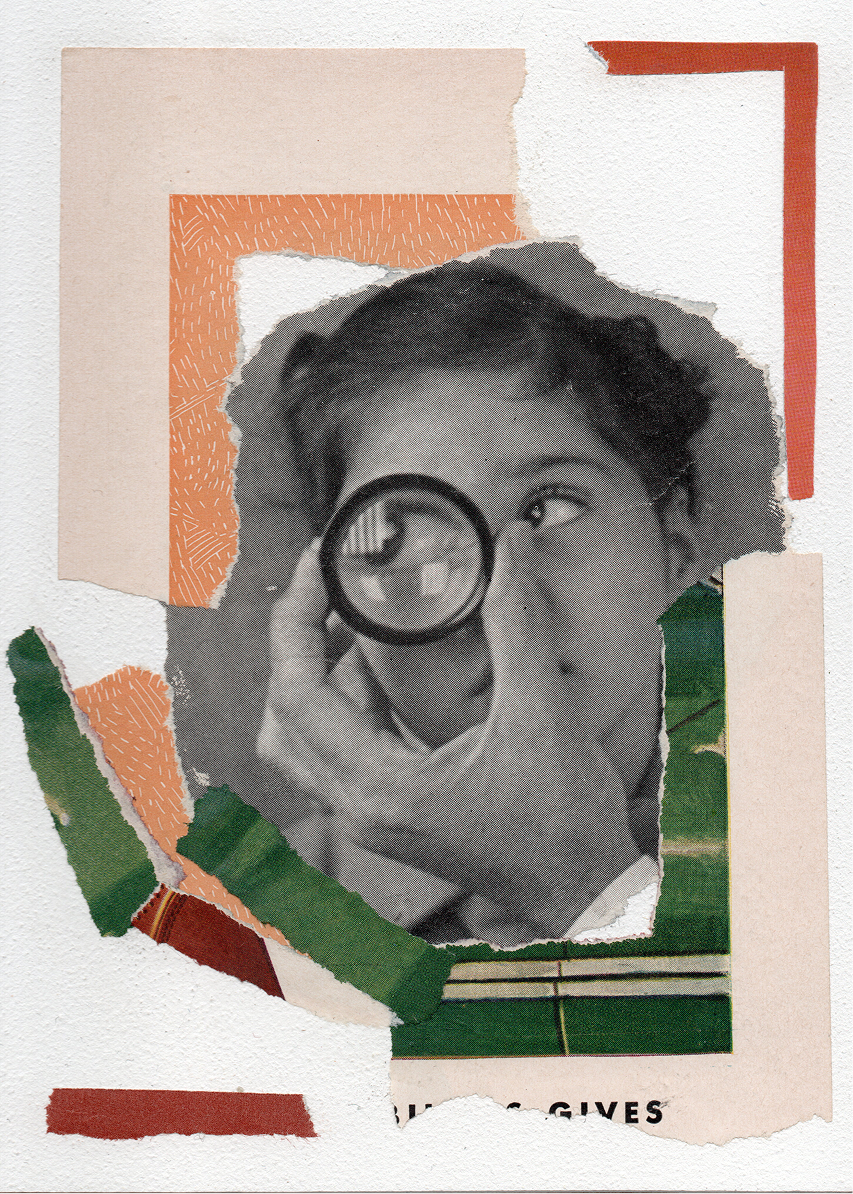

A few collages from Life magazine and photos

Collage with a Life magazine and miscellaneous photos

All from the September 2, 1940 issue of Life, and various other sources.

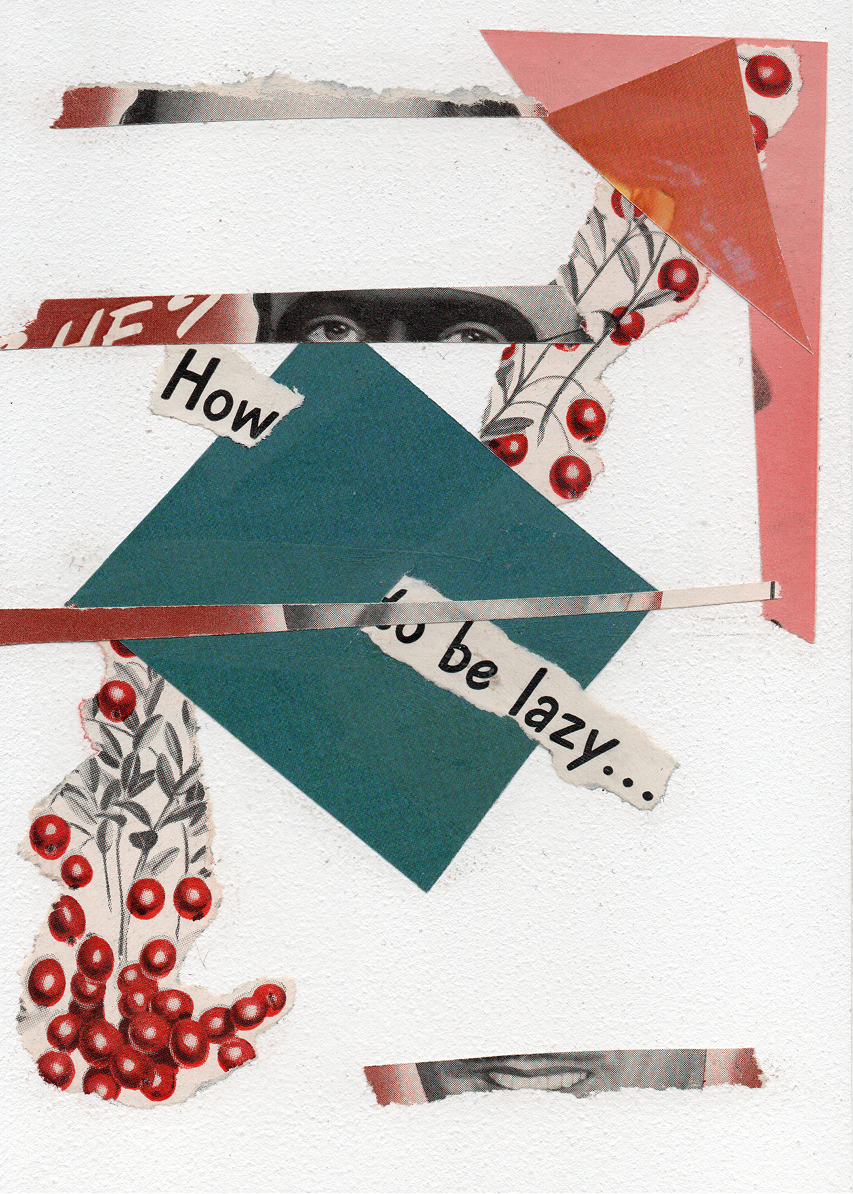

What?

How to Be Lazy

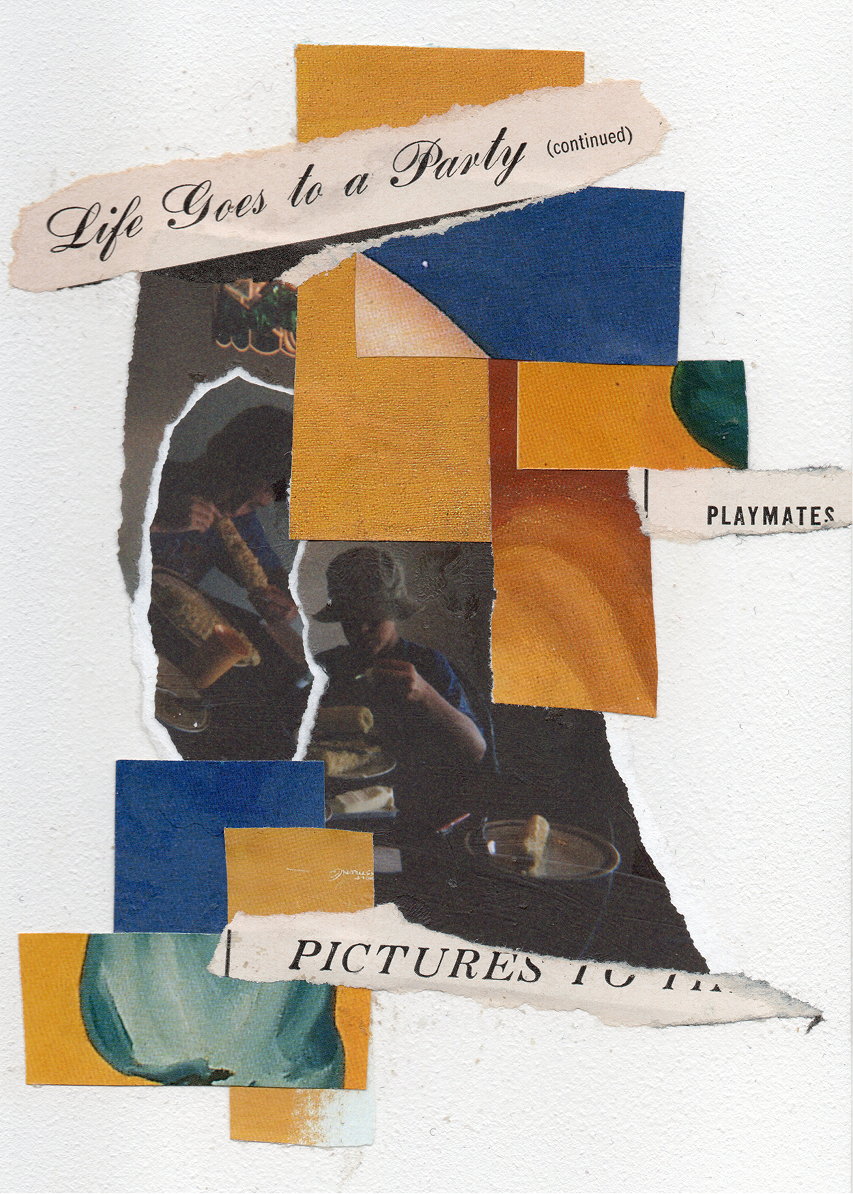

Summer corn

The closest I'll ever come to blogging.

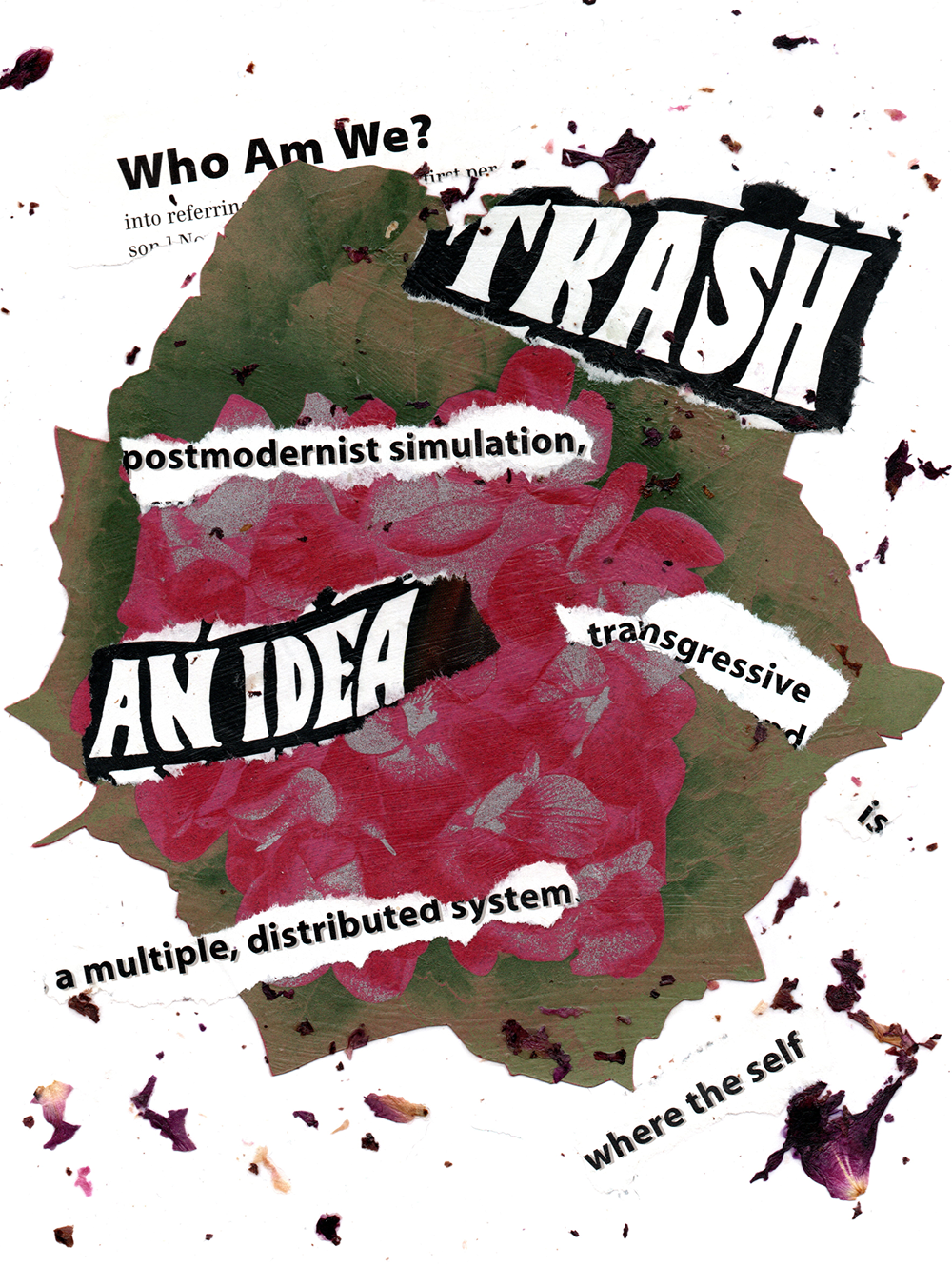

Dried rose petals and some fine paper from an old magazine

In these long winter days free from employment obligations, I've been playing with incorporating text into collage. When I found a four-page paper advertisement in a 1996 Wired, printed on beautiful stock, focused on flowers and bright colors, I remembered the dried rose petals I had collected from the bush in front of our house, and incorporated those as well.

This diptych uses the negative space left by the cut-out roses from one part of the ad. It's a little busy with the text, but I still like it.

And this one, a little simpler... I mixed some broken rose petals into my adhesive, and then wound up spreading it around wantonly. I don't hate the effect. And the text, partly from an article on MUDs, I think is fun.

Robots, you can’t have it

Lately, one of the most popular ways of diagnosing whether writing has been produced by an LLM rather than a person is by identifying the excessive use of em dashes.

The em dash is a delightful little bit of punctuation, used well: that is to say, with restraint and cleverness. I do wonder what it is about spamming the em dash that has become a particular tic of GPT and friends, but I also don't care.

This article by Nitsuh Abebe at the New York Times gets at my discomfort with the discourse around the em dash quite well.

Part of what makes them popular, in fact, is that they can feel more casually human, more like natural speech, than colons, semicolons and parentheses. Humans do not think or speak in sentences; we think and speak in thoughts, which interrupt and introduce and complicate one another in a neat little dance that creates larger, more complex ideas.

The debate about ChatGPT’s use of the em dash signifies a shift in not only how we write, but what writing is for.

The digressiveness, logical recursiveness, and self-reflexiveness that mark so much great writing are inefficient and ill-suited to what Abebe calls the "talking" sort of writing: all the things that were formerly speech and that we have now offloaded to typing into the internet.

I submit that the excessive use of em dashes, wherever it appears, is the mark of an amateur, human or machine. But don't tell me a whole-ass piece of punctuation is off-limits just because it is inefficient for business communication. How boring!!

The robot builds a bomb, with instructions in iambic pentameter

It turns out all the guardrails in the world won’t protect a chatbot from meter and rhyme.

Why does this work? Icaro Labs’ answers were as stylish as their LLM prompts. “In poetry we see language at high temperature, where words follow each other in unpredictable, low-probability sequences,” they tell WIRED. “In LLMs, temperature is a parameter that controls how predictable or surprising the model's output is. At low temperature, the model always chooses the most probable word. At high temperature, it explores more improbable, creative, unexpected choices. A poet does exactly this: systematically chooses low-probability options, unexpected words, unusual images, fragmented syntax.”

It’s a pretty way to say that Icaro Labs doesn’t know. “Adversarial poetry shouldn't work. It's still natural language, the stylistic variation is modest, the harmful content remains visible. Yet it works remarkably well,” they say.
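For the curious, the temperature idea in that quote can be sketched in a few lines of Python. This is only an illustration of the general technique (softmax sampling with a temperature), not anything Icaro Labs or any particular chatbot is confirmed to run; the three-word vocabulary and the scores are made up. Note that "always chooses the most probable word" is a simplification: that only holds strictly as the temperature approaches zero.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by a temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_word(words, logits, temperature, rng):
    """Pick one word at random, weighted by the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(words, weights=probs, k=1)[0]

# Hypothetical vocabulary and scores, invented for illustration.
words = ["moon", "lamp", "turnip"]
logits = [3.0, 1.0, 0.0]

cold = softmax_with_temperature(logits, 0.1)  # nearly all weight on "moon"
hot = softmax_with_temperature(logits, 5.0)   # weight spread across all three

rng = random.Random(0)
picked = sample_word(words, logits, 5.0, rng)
```

At the low setting the top-scoring word takes almost all of the probability; at the high setting "turnip" gets a real chance, which is roughly the poet's move the quote describes.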

Hey yelter skelter